While crossfire between the US presidential candidates continues to fly on the national stage, the social-sector measurement community continues to engage in a heated debate of its own.

This debate has revolved around the benefits and drawbacks of randomized controlled trials (RCTs) as the preferred study design for proving social impact. This back-and-forth, which has unfolded over the past decade at conferences and in online forums, has had real consequences for how nonprofits and funders approach their measurement work.

At our organization, One Acre Fund, we’ve found that RCTs can be a powerful and appropriate tool under certain conditions and for certain audiences. But as practitioners charged with maximizing impact for our clients, we often wonder whether our sector would be much better served by a new debate—one centered much more explicitly on improving impact.

Specifically, the social sector needs to coalesce around the common questions direct-service nonprofits ought to answer about their impact so that they can maximize learning and action around their program models. Put another way: What common insights would allow nonprofit leaders to make decisions that generate more social good for the clients they serve?

In part to answer this question, we recently undertook a comprehensive review of all field studies and research from our organization’s first decade of operation, and found four main categories of questions that drove the greatest learning, action, and impact improvement. We’ve ranked these below by difficulty level (low to high), although within each category there is considerable room for increased measurement rigor to enhance the quality and utility of the findings.

1. Impact drivers: Are there particular conditions or program components that disproportionately drive results? For nonprofits, especially multi-service organizations, studying the impact (and cost) of discrete conditions and components can lead to meaningful program redesign and improvement.

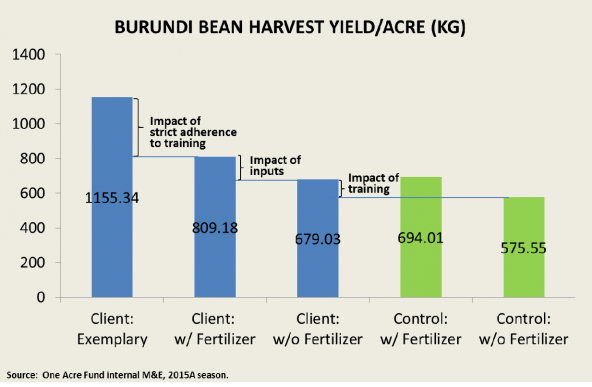

One Acre Fund provides a bundle of services (most importantly farm financing, input delivery, and agricultural training), so we seek to understand whether the whole is greater than the sum of the parts. For instance, the accompanying chart shows 2015 bean harvest levels of five farmer types in Burundi. Those who accessed fertilizer (through One Acre Fund financing and delivery) and strictly adhered to our trainings saw the strongest impact on their bean yields (and, when factoring in cost, the highest social return on their investment) of all program permutations.

We then analyzed the specific components of our training program, finding that two practices—correct fertilizer dosing and seed spacing—were by far the largest predictors of improved yields. We also found that these two practices had relatively low compliance rates. As a result, we introduced simple technologies—fertilizer scoops and pre-measured string for seed spacing—to make it easier for clients to follow our advice.

2. Impact distribution: Does the program generate better results for a particular sub-group? Most organizations, including ours, primarily measure and report on average (mean) performance, but this doesn’t tell you whether a small set of “super-performers” is masking the relatively weaker impact achieved by the rest. We’ve tackled this question in two ways.

First, we calculate and compare median results with means. To date, we’ve found that median impacts across our program studies are quite close to our means, with a normal (not skewed) impact distribution curve. Second, we recently performed an in-depth analysis of yield impact by sub-group, looking for differences based on household size, education level, age, gender, and wealth. We found the most consistent differences in our age analysis, with stronger impacts among our older clients. This is prompting us to do more research on how we can attract, retain, and better serve younger farmers.
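The mean-versus-median comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not our actual analysis code: the function name `mean_median_gap` and both data sets are hypothetical, invented only to show how a few "super-performers" pull the mean away from the median.

```python
from statistics import mean, median

def mean_median_gap(impacts):
    """Return (mean, median, relative gap) for a list of per-client impacts.

    A relative gap near zero suggests a roughly symmetric distribution;
    a large positive gap suggests a few high performers are lifting the mean.
    """
    m = mean(impacts)
    med = median(impacts)
    gap = (m - med) / abs(med) if med != 0 else float("inf")
    return m, med, gap

# Hypothetical yield gains (kg per client); invented for illustration only.
balanced = [40, 45, 50, 55, 60]
skewed = [10, 12, 15, 18, 200]  # one "super-performer" pulls the mean up

for name, data in [("balanced", balanced), ("skewed", skewed)]:
    m, med, gap = mean_median_gap(data)
    print(f"{name}: mean={m:.1f}, median={med:.1f}, relative gap={gap:.0%}")
```

In the balanced set the mean and median coincide, while in the skewed set the mean is more than three times the median, which is the warning sign that an average alone would hide.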

3. Impact persistence: How does a given client’s impact change over time? A client’s longitudinal impact provides another rich source for impact improvement. Our studies in this area show longer-tenured clients achieve higher average yields per acre, devote more acreage to our program, and purchase higher numbers of life-improving “add-on” products, such as solar lights and cookstoves. Taken together, they achieve higher impact in our program and higher monthly household expenditures post-program. Further, preliminary research suggests clients who leave our program continue to see better yields than farmers who have never joined, but only at one-fourth of the incremental impact of farmers who stay in our program.
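One way to read the "one-fourth" figure is as a ratio of two incremental yields, each measured against farmers who never joined. The sketch below makes that arithmetic explicit. The function name `retained_share` and the yield numbers are hypothetical, chosen only so the ratio comes out to one-fourth; they are not study results.

```python
def retained_share(never_joined, left_program, stayed):
    """Fraction of the stayers' incremental yield that leavers still capture.

    All three arguments are average yields in the same unit; the baseline
    for both increments is the never-joined group.
    """
    incremental_stayed = stayed - never_joined
    incremental_left = left_program - never_joined
    return incremental_left / incremental_stayed

# Hypothetical yields (kg per acre), invented for illustration only.
share = retained_share(never_joined=1000, left_program=1100, stayed=1400)
print(f"Leavers retain {share:.0%} of the incremental impact")
```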

We believe these findings validate our long-term commitments to the communities we serve and suggest that we can improve impact through greater emphasis on client retention. Therefore, we are investigating new pricing strategies, package configurations, and add-on products to help longer-tenured clients continue climbing the ladder out of poverty.

4. Impact externalities: What are the positive and negative effects on the people and communities not directly accessing the program? Few nonprofit programs occur in a vacuum; hence, understanding externalities, whether intended or accidental, is crucial for impact improvement. At One Acre Fund, for instance, a plausible positive spillover could be organic observation and adoption of beneficial farming practices by un-enrolled neighbors. Indeed, our research suggests Kenyan neighbors in areas of long program tenure and high program penetration produced an extra 45 kilograms of maize per year, an extra month of food for a family. This finding suggests “inward-growth” (adding farmers in existing, densely populated areas) provides an impact boost for communities.

Equally important, additional food supply from One Acre Fund farmers could plausibly reduce village-level crop prices for all farmers. So far, we’ve not found such negative equilibrium effects of our core program, most likely because our farmers consume the majority of the excess they produce. However, we did find some market effects in a trial in Kenya, in which we offered loans to One Acre Fund farmers to store their maize. This program was meant to delay harvest sales until later in the season, when farmers could achieve higher prices. But in markets where loan take-up was high (and many farmers followed the same selling pattern), seasonal price dispersion fell, blunting the program’s impact for One Acre Fund farmers while improving revenues of un-enrolled farmers and stabilizing prices for the community.

Our comprehensive review identified these four questions as particularly fertile sources of learning. There may well be others, and we are eager for more “measurement mindshare” to be devoted to debating which ones matter most.

We also see a crucial role for funders to play. Instead of due diligence conversations and reporting templates focused largely on the strength of study design, we envision a more-encompassing dialogue around what organizations have learned from their past measurements and what actions they took as a result. Similarly, funders might use the four questions to help a nonprofit assess whether it understands enough about its model and has sufficiently improved it over time to even subject it to a rigorous study.

At the end of the day, we strongly believe that if nonprofits focused on answering these questions and adjusting their models accordingly, rather than obsessing over how they will prove their impact, we’d see a considerable boost in the social good our sector creates.

Matthew Forti is managing director of One Acre Fund USA (@OneAcreFund), where he leads the organization’s work outside of Africa, and helps oversee its measurement and evaluation function. Forti is also advisor to the performance measurement capability area at the Bridgespan Group (@BridgespanGroup), an advisory firm to mission-driven leaders and organizations.

Kim Siegal is the global M&E director of One Acre Fund (@OneAcreFund), where she has led the team since 2013. Prior to that she worked as a senior project manager for Innovations for Poverty Action and as a senior analyst for the US Government Accountability Office. She is currently based in Kigali, Rwanda.